Artificial Intelligence (AI) is rapidly transforming industries, but one major challenge remains: its lack of transparency. Many machine learning (ML) models, particularly deep learning networks, function as “black boxes,” making decisions that even their creators struggle to interpret. Explainable AI (XAI) addresses this issue by making AI systems more understandable, accountable, and trustworthy.

In this blog, we’ll explore the importance of XAI, its key techniques, real-world applications, and the future of explainable AI.

Why is Explainable AI Important?

1. Trust & Accountability

For AI to be widely adopted, users must trust its decisions. XAI provides insights into how models make predictions, fostering confidence among stakeholders.

2. Regulatory Compliance

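Laws like the GDPR (General Data Protection Regulation) in Europe give individuals rights around automated decision-making, including access to meaningful information about the logic involved. This matters most in sensitive areas like finance and healthcare, where companies may need to explain AI-driven decisions.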

3. Bias & Fairness Detection

AI models can inadvertently learn biases from their training data. XAI helps identify and mitigate discriminatory patterns, making AI fairer.

4. Debugging & Performance Improvement

Understanding why an AI model makes certain predictions helps data scientists and engineers fine-tune algorithms for better accuracy and efficiency.

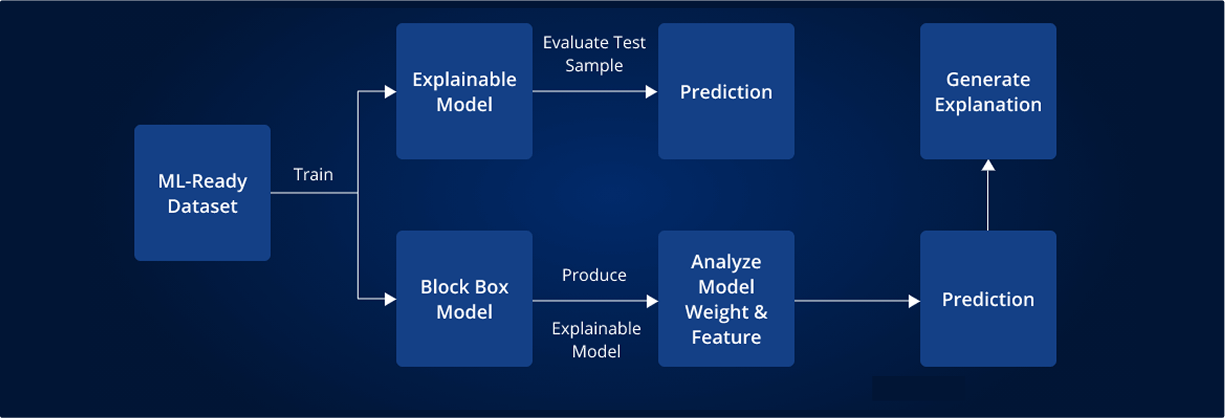

Key Techniques in Explainable AI

Several techniques help make AI models more interpretable. Here are the most common ones:

1. Feature Importance Analysis

- Identifies which input features (e.g., customer income, age) had the most impact on a model’s decision.

- Commonly used with decision trees, random forests, and gradient boosting models, which can derive importance scores from their splits.
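
As a rough illustration of the idea, here is a minimal sketch of permutation importance, one model-agnostic way to measure feature importance (it is not the split-based score that tree libraries report). The `model` function, the three features, and the random data are all hypothetical and exist only for this example.

```python
import random

def model(row):
    # Hypothetical scoring rule: income matters a lot, age a little,
    # and the third feature (noise) is ignored entirely.
    income, age, noise = row
    return 1 if 0.8 * income + 0.2 * age > 0.5 else 0

def accuracy(rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(rows, labels, n_features, seed=0):
    """Importance of a feature = accuracy lost when its column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(rows, labels)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between feature j and the label
        shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(baseline - accuracy(shuffled, labels))
    return importances

# Synthetic data: labels come from the model itself, so baseline accuracy is 1.0.
data_rng = random.Random(42)
rows = [(data_rng.random(), data_rng.random(), data_rng.random()) for _ in range(500)]
labels = [model(r) for r in rows]

importances = permutation_importance(rows, labels, n_features=3)
for name, score in zip(["income", "age", "noise"], importances):
    print(f"{name}: {score:.3f}")
```

Shuffling the ignored feature costs no accuracy, so its importance is zero, while shuffling income hurts the most. In practice a library routine would likely be preferable to hand-rolled code like this.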

2. SHAP (SHapley Additive exPlanations)

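- Uses Shapley values from cooperative game theory to break down how much each feature contributes to a prediction.

- Model-agnostic in principle, so it applies to complex ML models like neural networks, though exact computation becomes expensive as the number of features grows.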
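
To make the Shapley idea concrete, here is a small sketch that computes exact Shapley values by enumerating every feature coalition. It does not use the `shap` library. The `predict` function, the instance, and the all-zero baseline are hypothetical, and replacing "missing" features with baseline values is one common simplifying assumption rather than the only option.

```python
from itertools import combinations
from math import factorial

def predict(x):
    # Hypothetical model with an interaction between features 0 and 2.
    return 2 * x[0] + 3 * x[1] + x[0] * x[2]

def shapley_values(predict, instance, baseline):
    """Exact Shapley values; cost grows exponentially with feature count."""
    n = len(instance)

    def value(coalition):
        # Features in the coalition take the instance's value, others the baseline's.
        mixed = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(mixed)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis

instance = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phis = shapley_values(predict, instance, baseline)
print(phis)
print(sum(phis), predict(instance) - predict(baseline))
```

The attributions add up to the difference between the prediction for the instance and the prediction for the baseline, which is the "additive" part of the name. Practical tools approximate these values because full enumeration is only feasible for a handful of features.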

3. LIME (Local Interpretable Model-Agnostic Explanations)

- Fits a simple, interpretable model (such as a linear model) around a single input to approximate the black-box model’s behavior there and explain that individual prediction.

- Commonly used in fraud detection and healthcare diagnostics.
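
The local-approximation idea can be sketched in a few lines. This is a LIME-style illustration, not the `lime` library: it perturbs one input, weights the perturbed samples by proximity, and fits a weighted linear model. The `black_box` function, the sampling scale, and the kernel width are all hypothetical choices made for this example.

```python
import math
import random

def black_box(x):
    # Hypothetical nonlinear model standing in for an opaque classifier.
    return 1 / (1 + math.exp(-(3 * x[0] - x[1] ** 2)))

def solve(a, b):
    """Solve a small linear system with Gaussian elimination and pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def local_surrogate(predict, instance, n_samples=500, scale=0.3,
                    kernel_width=0.5, seed=0):
    """Fit a proximity-weighted linear model around `instance`."""
    rng = random.Random(seed)
    d = len(instance)
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(n_samples):
        sample = [v + rng.gauss(0, scale) for v in instance]
        dist2 = sum((s - v) ** 2 for s, v in zip(sample, instance))
        w = math.exp(-dist2 / kernel_width ** 2)  # closer samples count more
        row = [1.0] + [s - v for s, v in zip(sample, instance)]
        y = predict(sample)
        for i in range(d + 1):
            xtwy[i] += w * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += w * row[i] * row[j]
    coef = solve(xtwx, xtwy)  # weighted least squares via normal equations
    return coef[0], coef[1:]

instance = [0.2, 1.0]
intercept, weights = local_surrogate(black_box, instance)
print("local prediction:", round(intercept, 3))
print("local feature weights:", [round(w, 3) for w in weights])
```

The sign and size of each local weight indicate how that feature pushes the prediction near this one input. The explanation is only valid in that neighborhood and can differ for other inputs.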

4. Counterfactual Explanations

- Answers “What if?” questions by showing how slight input changes affect model predictions.

- Useful in loan approval and hiring decisions.
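
Here is a minimal sketch of a counterfactual search for a loan decision. The `approve` rule, the feature names, and the step sizes are hypothetical. The search changes one feature at a time and reports the smallest change that flips the decision, which is a deliberately simple strategy.

```python
def approve(applicant):
    # Hypothetical loan rule using integer features.
    score = (applicant["income_k"]
             + 5 * applicant["years_employed"]
             - applicant["debt_pct"])
    return score >= 30

def single_feature_counterfactuals(predict, applicant, steps, max_steps=50):
    """For each feature, find the smallest stepped change that flips the decision."""
    original = predict(applicant)
    found = {}
    for feature, step in steps.items():
        for k in range(1, max_steps + 1):
            candidate = dict(applicant)
            candidate[feature] = applicant[feature] + k * step
            if predict(candidate) != original:
                found[feature] = candidate[feature]
                break
    return found

applicant = {"income_k": 40, "years_employed": 2, "debt_pct": 50}
# Direction and size of one step per feature (assumed to be actionable changes).
steps = {"income_k": 5, "years_employed": 1, "debt_pct": -5}

print(approve(applicant))  # False
counterfactuals = single_feature_counterfactuals(approve, applicant, steps)
print(counterfactuals)  # {'income_k': 70, 'years_employed': 8, 'debt_pct': 20}
```

Each entry reads as a "what if": the loan would have been approved with an income of 70k, with 8 years of employment, or with a debt ratio of 20%. Realistic counterfactual methods also change several features at once and restrict the search to plausible, actionable values.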

5. Attention Mechanisms in Neural Networks

- Highlights which parts of input data (e.g., specific words in a text) influenced an AI model’s decision.

- Popular in NLP (Natural Language Processing) models like BERT and the GPT models behind ChatGPT.
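
To show where these highlights come from, here is a small sketch of scaled dot-product attention weights for a single query. The tokens, the two-dimensional key vectors, and the query are made up for illustration; real models learn these vectors and use many attention heads and layers.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Scaled dot-product attention: softmax(q . k / sqrt(d)) over all keys."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    return softmax(scores)

tokens = ["the", "movie", "was", "terrible"]
# Hypothetical key vectors, one per token.
keys = [[0.1, 0.0], [0.5, 0.2], [0.1, 0.1], [2.0, 1.5]]
query = [1.0, 1.0]

weights = attention_weights(query, keys)
most_attended = tokens[weights.index(max(weights))]
for token, weight in zip(tokens, weights):
    print(f"{token}: {weight:.3f}")
print("most attended token:", most_attended)
```

The weights sum to 1, and the token with the largest weight is the one the query "looks at" most. Attention weights are a useful hint, but they should be read with some caution, since a high weight does not by itself prove that a token caused the output.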

Real-World Applications of Explainable AI

1. Healthcare

- AI-driven diagnostics: Doctors need to understand how an AI model predicts diseases like cancer. XAI shows which inputs drove a prediction so clinicians can check it against their own judgment.

- Medical imaging: AI highlights regions in X-rays or MRIs that indicate abnormalities.

2. Finance & Banking

- Loan approvals: Banks use AI to assess creditworthiness. XAI ensures transparency in why a loan was approved or denied.

- Fraud detection: Helps financial institutions understand why transactions are flagged as fraudulent.

3. Autonomous Vehicles

- Self-driving cars rely on AI to make real-time decisions. XAI helps engineers and regulators examine why a vehicle acted as it did and audit those decisions for safety and bias.

4. Human Resources & Hiring

- AI-driven hiring tools analyze resumes and rank candidates. XAI helps reveal whether rankings depend on inappropriate factors, so unfair or biased behavior can be detected and corrected.

Challenges in Explainable AI

1. Complexity vs. Interpretability

- Simpler models (like linear regression) are easy to explain but often less powerful.

- More complex models (like deep learning) can be highly accurate but are difficult to interpret.

2. Trade-off Between Performance & Transparency

- Making AI models explainable can sometimes reduce their accuracy. Balancing these factors is a challenge.

3. Lack of Standardization

- Different industries require different levels of AI explainability, making standardization difficult.

Future of Explainable AI

As AI adoption grows, XAI will become a fundamental requirement across industries. Here’s what to expect:

- Regulations are likely to require more AI transparency in critical sectors like healthcare, finance, and law enforcement.

- AI models will be designed with explainability in mind rather than as an afterthought.

- Hybrid AI systems that combine interpretable models with deep learning will become more common.

The ultimate goal? Making AI as understandable as human decision-making.

Conclusion

Explainable AI (XAI) is crucial for building trust, ensuring fairness, and improving AI systems. As AI becomes a part of our daily lives, transparency and accountability will be non-negotiable. By embracing XAI, businesses and researchers can create ethical, reliable, and high-performing AI solutions.