Artificial Intelligence (AI) systems are increasingly part of daily life: they decide what news we see, screen job applications, approve loans, and even guide medical diagnoses. These systems promise efficiency and innovation, but they are not infallible. When they make mistakes, such as biased hiring recommendations, wrongful denial of services, or flawed medical recommendations, the consequences can be severe. This raises a critical question: who is responsible when AI gets it wrong?

Algorithmic accountability is about defining, regulating, and enforcing responsibility in the age of intelligent systems.

Understanding the Problem of AI Errors

AI systems do not exist in isolation. They are designed, trained, and deployed by people, organizations, and governments. Errors can occur for several reasons:

-

Biased Training Data: When historical data reflects inequality, AI often replicates those patterns.

-

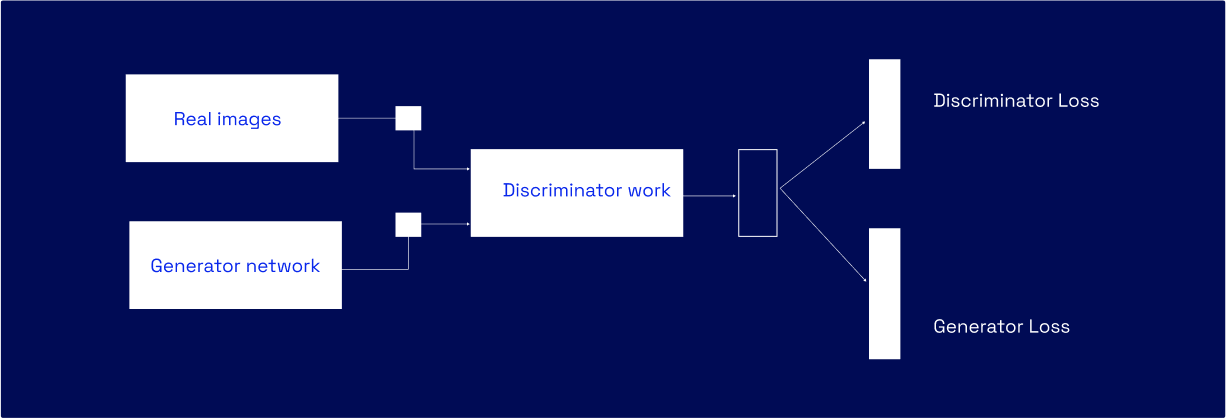

Opaque Decision-Making: Deep learning models can function as “black boxes,” making it difficult to explain decisions.

-

Poor Deployment Practices: AI tools misused in the wrong context may cause unintended harm.

-

Lack of Oversight: Insufficient governance allows errors to go unchecked, compounding their impact.

These factors blur the line of responsibility. Is the fault with the developers, the companies deploying the system, or the regulators who failed to enforce oversight?

Who Holds the Responsibility?

Developers and Data Scientists

Developers play a crucial role in designing and testing AI. If an algorithm is poorly trained or inadequately tested, accountability should rest with its creators. Ethical development practices, such as using diverse datasets and stress-testing models, are essential.

Organizations and Businesses

Companies deploying AI systems bear significant responsibility. They decide how and where to use AI, and they must ensure compliance with ethical and legal standards. When an AI-driven system makes harmful decisions, businesses cannot shift all blame to the technology itself.

Governments and Regulators

Regulation is increasingly vital to ensure accountability. Governments must establish frameworks for AI auditing, certification, and redress mechanisms. For example, the European Union’s AI Act classifies AI systems by risk level and imposes transparency obligations on certain systems.

Users and Society

End-users also share some responsibility for how AI systems are adopted. Accepting automated outputs without question breeds over-dependence and lets errors go unchallenged. Educating society on AI’s limitations is part of shared accountability.

Building Accountability into AI Systems

Accountability is not just about assigning blame after harm occurs—it is about designing systems and governance structures that prevent harm in the first place. Key strategies include:

- Explainable AI (XAI): Making decisions transparent and understandable.
- Bias Audits and Testing: Regularly testing AI for discriminatory outcomes.
- Ethical Guidelines and Standards: Industry-wide codes of conduct to promote fairness and responsibility.
- Clear Legal Frameworks: Regulations that define liability and protect affected individuals.
- Human-in-the-Loop Systems: Ensuring critical decisions involve human oversight.
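To make the bias audit strategy above concrete, here is a minimal sketch of one common check: comparing selection rates across groups. The function names, the sample data, and the 0.8 cutoff are assumptions for illustration. The cutoff mirrors the "four-fifths" rule of thumb used in some employment contexts, and a real audit would look at several metrics rather than this one alone.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the share of favorable outcomes per group.

    records: iterable of (group, selected) pairs, where selected is a bool.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            favorable[group] += 1
    return {g: favorable[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so no disparity to measure
    return min(rates.values()) / highest

# Hypothetical screening outcomes, made up for illustration only.
decisions = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 20 + [("group_b", False)] * 80
)

# Assumed cutoff: 0.8 mirrors the "four-fifths" rule of thumb.
THRESHOLD = 0.8

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < THRESHOLD
print(rates, ratio, needs_review)
```

A ratio below the cutoff does not prove discrimination on its own. It is a signal that the system's outcomes deserve a closer look by the people accountable for it.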

Case Studies in Algorithmic Accountability

- Hiring Bias: A major tech company abandoned its AI recruitment tool after it was found to discriminate against women. Accountability fell on the developers and the organization that deployed it without proper checks.
- Healthcare Misdiagnosis: AI misinterpretation of medical scans has raised debates about whether responsibility lies with the software provider, the hospital, or the physician who relied on the tool.
- Financial Algorithms: Biased credit-scoring algorithms that denied loans to minority groups sparked lawsuits, emphasizing the role of financial institutions in oversight.

These cases highlight the shared, multi-layered nature of accountability.
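One way to keep those layers traceable is to record, for every automated decision, which model version produced it and whether a human signed off. The sketch below is a hypothetical illustration of a human-in-the-loop gate, not a description of any system in the cases above. The names `DecisionRecord`, `route`, and `sign_off`, and the 0.90 confidence cutoff, are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed cutoff for illustration; a real deployment would set this per use case.
REVIEW_THRESHOLD = 0.90

@dataclass
class DecisionRecord:
    case_id: str
    model_version: str
    model_output: str
    confidence: float
    needs_human_review: bool
    reviewer: Optional[str] = None
    final_decision: Optional[str] = None

def route(case_id, model_version, model_output, confidence, high_stakes):
    """Create a record; flag it for review if stakes are high or confidence is low."""
    needs_review = high_stakes or confidence < REVIEW_THRESHOLD
    record = DecisionRecord(
        case_id, model_version, model_output, confidence, needs_review
    )
    if not needs_review:
        # Low-stakes, high-confidence cases are finalized automatically.
        record.final_decision = model_output
    return record

def sign_off(record, reviewer, decision):
    """Record the human reviewer and their decision, which may override the model."""
    record.reviewer = reviewer
    record.final_decision = decision
    return record

# Hypothetical cases, made up for illustration only.
auto = route("case-001", "v1.2", "approve", 0.97, high_stakes=False)
flagged = route("case-002", "v1.2", "deny", 0.97, high_stakes=True)
sign_off(flagged, "reviewer_17", "approve")
```

With a record like this, a later dispute can be traced to a specific model version, a specific deployment rule, and a specific reviewer instead of to "the algorithm" in general.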

Conclusion

AI is not inherently neutral—it reflects the data, decisions, and contexts in which it is built and deployed. Algorithmic accountability requires a collaborative effort from developers, businesses, regulators, and society at large. By embedding transparency, ethical standards, and governance into AI systems, we can ensure that when AI gets it wrong, there is a clear path to responsibility and redress.

The future of AI depends not only on innovation but also on the trust society places in these systems. Accountability is the foundation of that trust.